This is the second part of a two-part summary of the IROS 2022 workshop Evaluating Motion Planning Performance: Metrics, Tools, Datasets, and Experimental Design. If you have not read the first part yet, I suggest starting there before reading this post.

In this second part, I will focus on the topics presented in the afternoon session. As stated in my first post, I might give my opinion on topics I am more comfortable with, but I will try to summarize every presentation.

Session 2: Performance and Evaluation Metrics

TL;DR

The second session focused on defining good metrics, finding appropriate parameters, and dealing with uncertainties and specific tasks where general planners may be unusable.

If you don’t want to read about each presentation separately, here is a list of my main takeaways from the afternoon session:

Hyperparameter Optimization as a Tool for Motion Planning Algorithm Selection

This talk was presented by Dr. Mark Moll from PickNik Robotics. The focus of this presentation was on how to select a suitable motion planning algorithm and the right parameters for a particular application. However, the search space for motion planning algorithms is very complex:

He proposes that hyperparameter optimization techniques, which are widely used in machine learning, can help with this task. Doing so requires a dataset of motion planning problems and a loss function. A good loss function should be fast to compute and able to distinguish good planners from bad ones. Dr. Moll used BOHB, a combination of HyperBand and Bayesian Optimization, to perform the hyperparameter optimization. Some of the results of his experiments are:

The code for HyperPlan is available here.

Author’s take: According to the results, hyperparameter optimization can drastically improve the performance of motion planners when compared with the default values. There are many robotic applications, so it is virtually impossible to find parameters that work well for every situation. However, if the practitioner can clearly define their problem, this approach seems promising. The code is still under development, but I believe it can become an integral part of MoveIt after a stable release comes out.
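To make the idea more concrete, here is a minimal sketch of successive halving, the budget-allocation scheme that HyperBand builds on. It is not BOHB and it is not the HyperPlan code: the planner is replaced by a toy loss, and every name in it (`simulated_planning_loss`, `step_size`, the problem "scale") is a hypothetical stand-in I made up for illustration.

```python
import random
from statistics import mean


def simulated_planning_loss(step_size, problem_scale, budget, rng):
    """Toy stand-in for running a planner on one problem (assumption, not a real planner).

    The best step size is taken to be 0.1 * problem_scale, and a larger
    budget yields a less noisy loss estimate.
    """
    noise = abs(rng.gauss(0.0, 1.0)) / budget
    return abs(step_size - 0.1 * problem_scale) + noise


def dataset_loss(config, problems, budget, rng):
    """Average the loss of one configuration over the whole problem dataset."""
    return mean(
        simulated_planning_loss(config["step_size"], p, budget, rng)
        for p in problems
    )


def successive_halving(problems, n_configs=27, eta=3, min_budget=1, seed=0):
    """Keep the best 1/eta of the configurations each round, with eta times more budget."""
    rng = random.Random(seed)
    # Sample random candidate configurations (a single hypothetical parameter here).
    configs = [{"step_size": rng.uniform(0.01, 2.0)} for _ in range(n_configs)]
    budget = min_budget
    while len(configs) > 1:
        ranked = sorted(configs, key=lambda c: dataset_loss(c, problems, budget, rng))
        configs = ranked[: max(1, len(configs) // eta)]
        budget *= eta
    return configs[0]


problems = [5.0, 5.0, 5.0]  # hypothetical dataset of problem "scales"
print(successive_halving(problems))
```

Cheap, noisy evaluations are used to discard clearly bad configurations early, and the expensive evaluations are reserved for the few candidates that survive. BOHB, as described in the talk, additionally uses Bayesian Optimization to propose configurations instead of sampling them uniformly at random.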

Nonparametric Statistical Evaluation of Sampling-Based Motion Planning Algorithms

This talk was presented by Dr. Jonathan Gammell from the Oxford Robotics Institute. The speaker shared best practices for the statistical evaluation of sampling-based planners. His main points were:

Author’s take: I also consider planning success to be the most important metric when evaluating a planner, as a planner with a low success rate cannot be used in critical applications. However, using it as the sole evaluation metric is insufficient. I believe the metrics should vary based on the application. For example, when evaluating the performance of a planned trajectory in an industrial environment, metrics such as clearance from obstacles, maximum joint torque, trajectory jerk, and trajectory duration must also be considered. On the other hand, in the case of Offline Programming, planning time is less critical, as there is no real-time requirement.
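As an illustration of what a nonparametric evaluation can look like in practice, the sketch below computes the success rate and a distribution-free confidence interval for the median planning time from order statistics. This is my own minimal example and not code from the talk. Recording failed runs as infinite planning time is an assumption I chose so that failures are not silently dropped from the median, and the sample data is made up.

```python
import math
from math import comb


def success_rate(times):
    """Fraction of runs that found a solution (failed runs are recorded as math.inf)."""
    return sum(math.isfinite(t) for t in times) / len(times)


def median_confidence_interval(times, confidence=0.95):
    """Distribution-free confidence interval for the median from order statistics.

    With sorted values x_(1) <= ... <= x_(n), the interval [x_(l), x_(u)]
    contains the true median with probability sum_{k=l}^{u-1} C(n, k) / 2^n.
    Returns the narrowest symmetric interval that reaches the requested level,
    together with its actual coverage.
    """
    xs = sorted(times)
    n = len(xs)
    for lower in range(n // 2, 0, -1):
        upper = n - lower + 1
        coverage = sum(comb(n, k) for k in range(lower, upper)) / 2 ** n
        if coverage >= confidence:
            return xs[lower - 1], xs[upper - 1], coverage
    raise ValueError("too few runs for the requested confidence level")


# Hypothetical planning times in seconds; math.inf marks a failed run.
times = [0.8, 1.1, 0.9, 1.3, 1.4, 1.0, 2.3, 0.7, 1.2, math.inf]
print(success_rate(times))
print(median_confidence_interval(times))
```

The interval makes no assumption about the shape of the planning-time distribution, which matters because planning times are rarely normally distributed. If too many runs fail, the upper bound itself becomes infinite, which is an honest signal that the median cannot be bounded from that many runs.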

Comparable Performance Evaluation in Motion Planning under Uncertainty

This talk was presented by Professor Hanna Kurniawati from Australian National University. The speaker focused on the issues when evaluating motion planning under uncertainty and the efforts to overcome those issues. The key takeaways from her talk were:

Lessons learned from Motion Planning Benchmarking

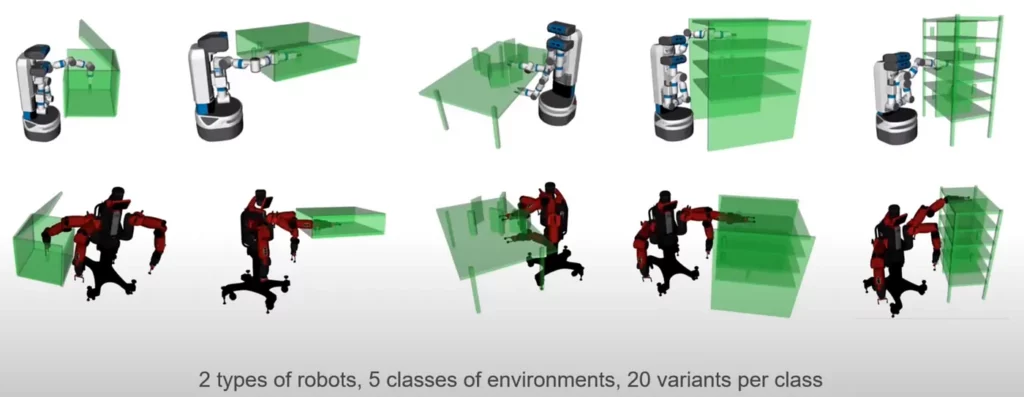

This talk was presented by Dr. Andreas Orthey from Realtime Robotics. The speaker’s main focus was that using tailor-made planning solutions is paramount when dealing with complex tasks. The key takeaways from the talk were:

Author’s take: Using specialized planners is paramount for our industrial applications. Offline Planning is a complex but well-defined problem with clear metrics that need to be optimized. Hence, MakinaRocks’ OLP team also uses a custom planner that combines sampling-based planners, optimizing planners, and custom heuristics.

Panel on Performance and Evaluation Metrics

The second panel featured Dr. Jonathan Gammell, Dr. Mark Moll, Prof. Hanna Kurniawati, and Dr. Andreas Orthey. The main topics of discussion and conclusions are summarized below:

Importance of estimating the planner's performance:

Benchmarking and competitions to measure the progress of planners every year. Is it helpful or just a distraction?

Did we reach the limit for general planners, and should we focus on optimizing for specific problems?

Parting Words

The second session touched on some topics that were also present in the morning session, such as promoting benchmarks and competitions focused on path planning. Most of the presenters shared the view that the indiscriminate use of benchmarks in research can do more harm than good, as it might discourage research on applications not covered by the benchmarks. As stated in the first post of this series, I agree that industry-backed benchmarks and challenges make more sense as a way to bring research breakthroughs into real applications.

They also discussed whether the community should focus on tailor-made algorithms that work well for their intended application or on general planners that work well across various applications. The consensus was that both approaches should be explored in parallel and can benefit each other in the long run. However, I believe that tailor-made planners are more suitable for industrial applications, where trading flexibility for better performance is still worth it in most cases. This might change in the future when robots are able to perform several tasks in various environments, but there is still a long way to go.

Overall, I enjoyed the workshop, and I am looking forward to future editions. Hopefully, it can become an annual workshop where researchers and practitioners can meet and share knowledge and tools to improve the motion planning field. Moreover, I want to express my gratitude to the organizers, who worked hard to make the event so enjoyable, and to MakinaRocks for sending my teammates and me to IROS 2022 so we could experience the latest robotics trends firsthand.

Finally, I would like to thank you for taking the time to read this post series. Feel free to contact me to discuss the contents of this post, the OLP project, or robotics in general. Don’t forget to check our other posts covering our experience and the latest robotics trends from IROS 2022 (even if you can’t speak Korean, Google Translate can work wonders 😉).

» This article was originally published on our Medium blog and is now part of the MakinaRocks Blog. The original post remains accessible here.